The Forbes article, written by Joanna Peña-Bickley, Global Chief Creative Officer at IBM, laid the groundwork for her talk 48 hours later at the Tribeca Film Festival, titled “Cognitive Storytelling in 4D – How Would Orson Welles Tackle It?” Pointing back to Welles’ live radio stunt The War of the Worlds, which convinced many listeners that Martians were invading Earth, Peña-Bickley frames that event as the moment radio went from a storytelling medium to an experience medium. Claiming that we now live in the “cognitive era,” she went on to highlight technologies such as AI, machine learning, generative art, and virtual reality as the source of new storytelling experiences.

There is no shortage of hype around many of the technologies Peña-Bickley highlighted. Augmented reality and virtual reality (AR/VR) were among 33 CES technology categories this year, with 261 exhibitors, making it the largest showcase of AR/VR technology ever. Given how long VR has been around (some trace its origins back to stereoscopic photos and viewers in the early 1800s), many would argue that the technology is only now becoming viable for consumers. If it has taken VR this long to get its sea legs, how real is this notion of Cognitive Storytelling?

Obviously, it’s in IBM’s interest as a global branding strategy to preface as many nouns as possible with the word ‘cognitive’ and lay claim to it. As someone in the business of storytelling, I wanted to better understand IBM’s definition of Cognitive Storytelling and how Watson’s work is being applied to our craft. Moreover, given how much talk there is in the wealth management industry about robo-advice, I thought it would be interesting to explore how artificial intelligence is affecting the entertainment industry.

In 2011, Watson started out as a single natural language question-answering (QA) API. Today the platform comprises more than 50 technologies. In both her Forbes article and her Tribeca talk, Peña-Bickley urged today’s storytellers to consider Watson for future endeavors.

I checked out Watson’s Developer Cloud to get an idea of which APIs would make up a cognitive storyteller’s toolbox. Below are just a few, along with IBM’s descriptions of what they provide:

Natural language processing for advanced text analysis

AlchemyLanguage is a collection of natural language processing APIs that help you understand sentiment, keywords, entities, high-level concepts and more. You can use AlchemyLanguage to understand how your social media followers feel about your products, to automatically classify the contents of a webpage, or to see what topics are trending in the news.

Natural language interface to your application to automate interactions with your end users

Watson Conversation combines a number of cognitive techniques to help you build and train a bot: defining intents and entities and crafting dialog to simulate conversation. The system can then be further refined with supplementary technologies to make it more human-like or to give it a higher chance of returning the right answer. Watson Conversation allows you to deploy a range of bots via many channels, from simple, narrowly focused bots to much more sophisticated, full-blown virtual agents across mobile devices, messaging platforms like Slack, or even a physical robot.

Understand personality characteristics, needs, and values in written text

Personality Insights extracts personality characteristics based on how a person writes. You can use the service to match individuals to other individuals, opportunities, and products, or tailor their experience with personalized messaging and recommendations. Characteristics include the Big Five personality traits, Values, and Needs. At least 1,200 words of input text are recommended when using this service.

Enhance information retrieval with machine learning

Retrieve and Rank can surface the most relevant information from a collection of documents. For example, using R&R, an experienced technician can quickly find solutions from dense product manuals. A contact center agent can also quickly find answers to improve average call handle times. The Retrieve and Rank service works "out of the box," but can also be customized to improve the results.

Understand tone and style in written text

Tone Analyzer uses linguistic analysis to detect three types of tones in written text: emotions, social tendencies, and writing style. Use the Tone Analyzer service to understand conversations and communications. Then, use the output to appropriately respond to customers.

Understand image content

Visual Recognition understands the contents of images - it's learned over 20,000 visual concepts including faces, age and gender. You can also train the service by creating your own custom concepts. Use Visual Recognition to detect a dress type in retail, identify spoiled fruit in inventory, and more.

Rapidly build a cognitive search and content analytics engine and add it to existing applications with minimal effort

Extract value from your data by converting, normalizing and enriching it with integrated Watson APIs. Combine proprietary data with pre-enriched news data. Use a simplified query language to explore your data and embed Discovery into existing applications.

Make better choices with a full view of your data

Tradeoff Analytics helps people make decisions when balancing multiple objectives. When you make decisions, how many factors are considered? How do you know when you’ve identified the best option? With Tradeoff Analytics, you can avoid lists of endless options and determine the right option by considering multiple objectives.
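One common way to narrow "lists of endless options" when objectives conflict is to discard any option that another option beats on every objective, leaving the Pareto-optimal set. The Python below is a minimal sketch of that idea only, not IBM's implementation or API; the two objectives (`return`, to maximize, and `risk`, to minimize) and all option values are hypothetical.

```python
from typing import Dict, List

# Hypothetical options scored on two objectives:
# "return" (higher is better) and "risk" (lower is better).
Option = Dict[str, float]


def dominates(a: Option, b: Option) -> bool:
    """True if a is at least as good as b on every objective and strictly better on one."""
    at_least_as_good = a["return"] >= b["return"] and a["risk"] <= b["risk"]
    strictly_better = a["return"] > b["return"] or a["risk"] < b["risk"]
    return at_least_as_good and strictly_better


def pareto_front(options: Dict[str, Option]) -> List[str]:
    """Keep only the options that no other option dominates."""
    return [
        name
        for name, opt in options.items()
        if not any(dominates(other, opt)
                   for other_name, other in options.items()
                   if other_name != name)
    ]


options = {
    "A": {"return": 0.07, "risk": 0.12},
    "B": {"return": 0.05, "risk": 0.06},
    "C": {"return": 0.04, "risk": 0.09},  # B beats C on both objectives
    "D": {"return": 0.09, "risk": 0.20},
}
print(pareto_front(options))  # ['A', 'B', 'D']
```

Options A, B and D survive because each trades one objective against the other; C drops out because B offers a higher return at lower risk. A decision-maker would then choose among the survivors rather than the full list.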

Embed cognitive functions in various form factors such as spaces, avatars or other IoT devices

Project Intu is a new, experimental program from IBM that enables developers to imbue Internet of Things (IoT) systems (robots, drones, avatars and other devices) with the cognitive know-how of Watson. The program spares developers from having to program each individual movement and response of an IoT system. Project Intu provides the device with Watson Conversation, speech-to-text, language and visual recognition capabilities, among others, so that it can respond naturally to user interactions. It can even trigger different behaviors or emotional responses based on what the user does.

Without question, IBM has assembled a rich array of APIs that tap into Watson’s AI. Let’s explore how storytellers have been using this technology in their own work.

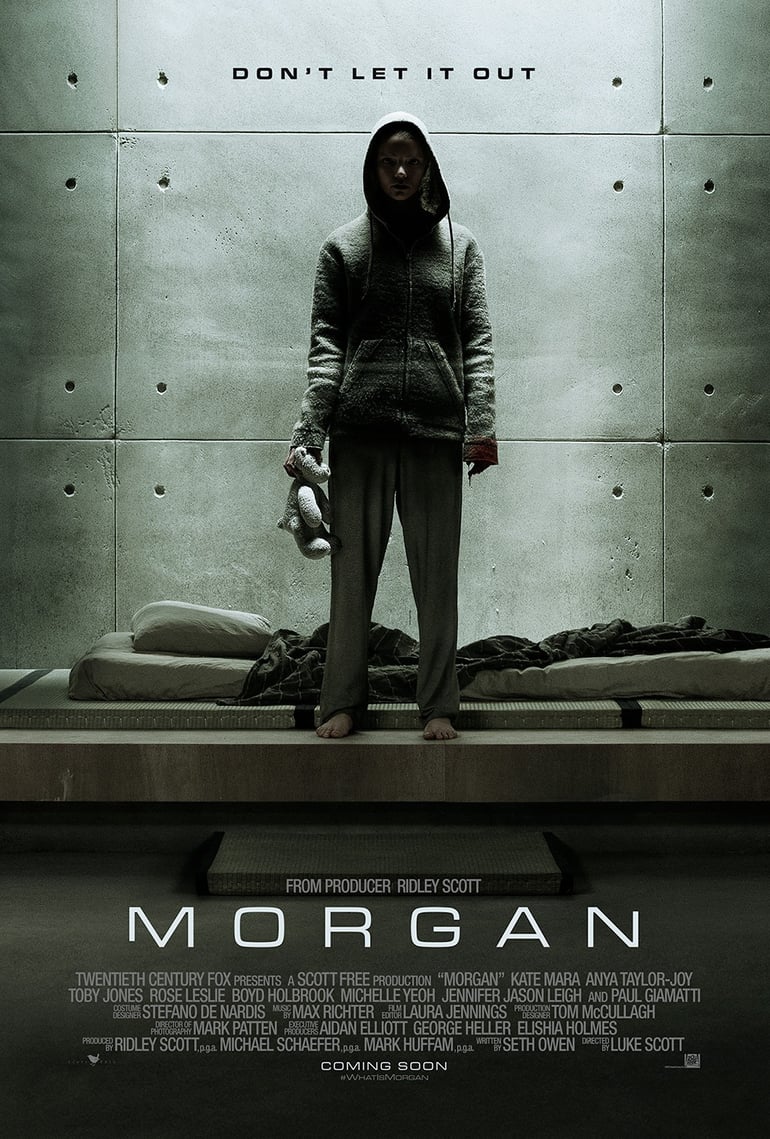

MORGAN

Morgan, a sci-fi AI thriller starring Kate Mara and Paul Giamatti, was directed by Luke Scott, written by Seth Owen, produced by Ridley Scott, and distributed by 20th Century Fox. Fox approached IBM to use Watson to create the film’s trailer. Watson consumed the entire film, ran sentiment analysis on different points within it, and curated the most impactful moments.
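IBM does not describe its selection logic in detail, so the following is only a hypothetical sketch of the final curation step. It assumes each scene already carries an impact score; the `Scene` class, `pick_trailer_scenes`, the timestamps, labels and scores are all invented for illustration and do not come from the film or from Watson. The sketch keeps the highest-scoring moments and returns them in film order so they can be cut together.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Scene:
    start_s: float  # hypothetical scene start time, in seconds
    label: str      # hypothetical scene label
    score: float    # hypothetical "impact" score, e.g. from sentiment/tone analysis


def pick_trailer_scenes(scenes: List[Scene], k: int) -> List[Scene]:
    """Take the k highest-scoring scenes, then restore chronological order."""
    top = sorted(scenes, key=lambda s: s.score, reverse=True)[:k]
    return sorted(top, key=lambda s: s.start_s)


scenes = [
    Scene(120.0, "scene 01", 0.41),
    Scene(905.5, "scene 02", 0.93),
    Scene(1500.0, "scene 03", 0.78),
    Scene(2210.0, "scene 04", 0.12),
    Scene(3300.0, "scene 05", 0.88),
]
for s in pick_trailer_scenes(scenes, k=3):
    print(f"{s.start_s:>7.1f}s  {s.label}  ({s.score:.2f})")
```

Sorting by score first and by time second separates the two decisions: which moments matter, and in what order a viewer should see them.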

The end result:

Watson Trailer and Making-Of Video

Now here is the official trailer for the movie (2:24 minutes):

In my opinion, the official trailer is far superior from a storytelling perspective. In fairness to Watson, though, the official trailer was 70 seconds longer, giving the plot and core themes room to shine through. What is notable about Watson’s output is that it was produced in only 24 hours! Moreover, IBM highlighted that Watson chose scenes "that would never be considered by humans, focusing instead on mood and atmosphere." For that, IBM’s AI deserves some recognition: Watson hit the creepy ball out of the park.

WATSON APIs USED: Tone Analyzer, AlchemyLanguage

NOT EASY

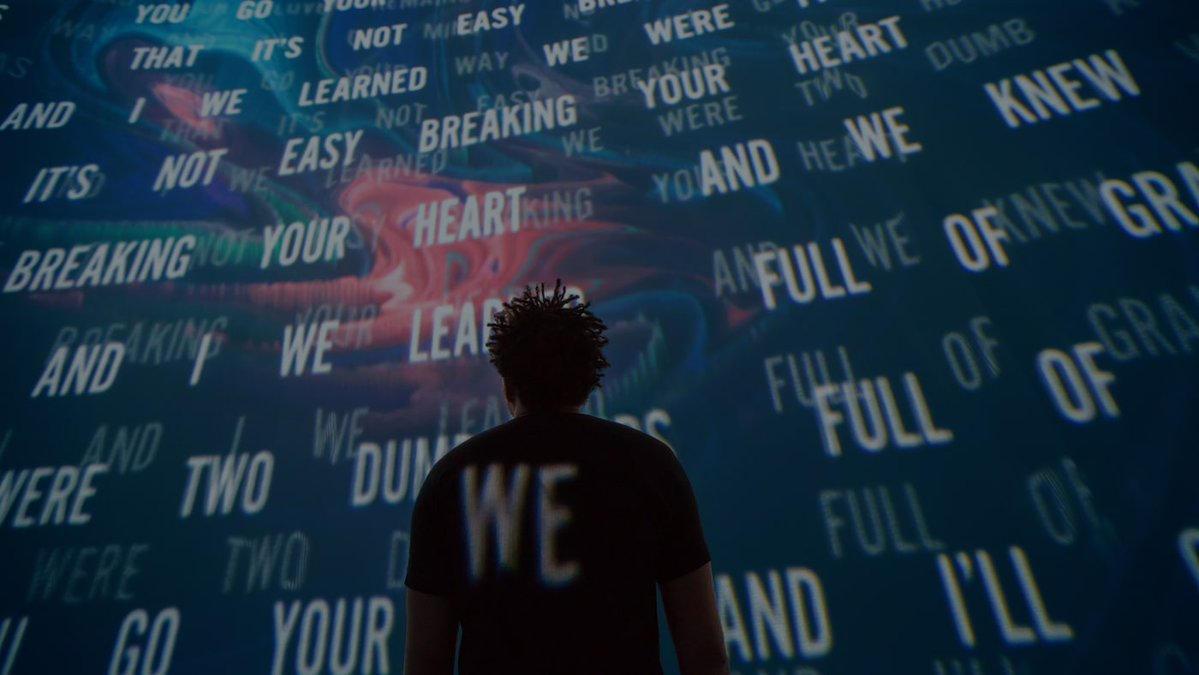

Rolling Stone recently highlighted an interesting collaboration between IBM’s Watson and Grammy award-winning music producer Alex Da Kid on his new song titled Not Easy.

Watson jumped into the project brain-first by analyzing five years' worth of cultural data – from news headlines and court rulings to movie synopses and internet searches – and identified themes and trends, and people’s emotions surrounding them. Watson also analyzed the lyrics and composition of 26,000 recent popular songs, determining ubiquitous emotions as well as common patterns in song structure.

Watson’s output was an enormous amount of data. In order to make the information accessible to the artist so that he could apply the learning to his songwriting, Janani Mukundan (IBM Research, Watson BEAT) and others at IBM sat down with Alex to “figure out how he understands information and emotion.” He told them he sees music in color.

IBM took that insight and built Alex a room with large screens visualizing Watson’s data by associating it with colors, patterns, words, and textures. This assisted Alex during the inspiration phase of his songwriting. Watson further contributed to Alex’s music-making process by giving him a prototype of Watson BEAT, the brand's cognitive system that recognizes and interprets music theory and emotion.

“It understands and deconstructs music and then allows artists to change it based on the moods that they want to express," says Richard Daskas (IBM Research).

"[Alex] would listen to [a piece of music] on the keyboard or have one of his musicians play on a keyboard or play it on a drum," Mukundan recalls. "Watson will listen to this and scan it. He can also tell Watson, 'Give me something that sounds romantic, or give me something that sounds like something I want to dance to.' And since Watson understands these emotional ranges and can understand music as well, he will then use his original piece as an inspiration and also add on top of it the layer of emotion that he wants."

The end result:

According to IBM, more artists like Alex Da Kid will collaborate with Watson to “...make hits from volumes of data. Watson gives artists the tools they need to see inspiration in places they never could before.”

WATSON APIs USED: Tone Analyzer, AlchemyLanguage, Watson BEAT, Cognitive Color Design Tool

IBM and ad agency Ogilvy & Mather organized the world’s first artistic collaboration between artists and a machine. They introduced six leading designers, illustrators, photographers and artists to Watson’s APIs, and each artist interpreted a Watson API as an original composition. IBM then asked Watson to process the artists’ compositions. The outcome was a poster series released in March 2016, which was turned into an online experience and a multimedia exhibit at Astor Place in New York City.

The end result:

Stories are how we connect with other human beings and make sense of our world. Understandably, the entrance of a non-human actor onto this stage may seem a bit unnerving. Much of that perception may be credited to pop culture painting AI into a dystopian corner, so to speak. Stories of humans threatened by ‘robots’ have roots as old as the Bible: in the Book of Ezekiel, dry bones come to life.

None of the three examples I cite here, however, was a story of Watson crushing its servile human creators. Instead, in every case Watson served as a supporting player. IBM’s big brain may have helped inform or inspire the artists, but in the end, each story owed its uniqueness to a distinctively human effort.

This echoes many of the observations about how important the human element is to robo-advice. In a white paper titled “The Rise of Robo-Advice,” Accenture stated:

There are parts of the client-advisor relationship—such as reassuring clients through difficult markets, persuading clients to take action and synthesizing different solutions—that should remain the province of the financial advisor for the foreseeable future. It is therefore essential to develop a unified client-advisor experience that seamlessly brings together the best of human and robo-advice capabilities. Understanding where robo-advice can complement and enhance relationships will be key for most full-service wealth management firms.

AI-generated stories that connect on a human level still seem a ways off. David Kenney, general manager of IBM Watson, has said he hopes one day to see Watson “ask humans questions and to develop abductive reasoning as opposed to deductive skills.” Even so, knowing how something happened, or that it happened, is not the same as experiencing it, and that experience is critical to storytelling.

Today, Watson’s imaginative application seems limited to identifying possible formal narrative connections through logical or direct arguments. AI does not yet seem able to produce a transformative experience, which is what stories do. In other words, it cannot yet generate compelling stories, gripping drama, or believable historical accounts (true or otherwise) on its own. For now…


Article first appeared in:

E R G O | Journal of Organizational Storytelling | Volume 2 . Issue 1 . 2017
